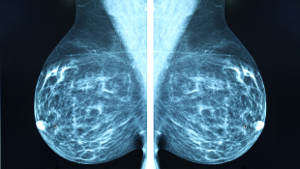

Artificial intelligence: Aiding, not replacing radiologists

A $1 million challenge using Kaiser Permanente data shows how AI can assist in breast cancer screening

By Diana S. M. Buist, PhD, MPH, Kaiser Permanente Washington Health Research Institute Senior Investigator and Director of Research and Strategic Partnerships

It’s only March and already 2020 is a big year for artificial intelligence (AI) tools for breast cancer screening. I’m excited to announce our publication in JAMA Network Open with results from a challenge with a $1 million purse. The international crowdsourced contest aimed to generate open-source code that the scientific, industrial, and regulatory communities can use to improve breast cancer detection.

A DREAM team

DREAM (which stands for “Dialogue for Reverse Engineering Assessment and Methods”) Challenges are organized by scientists from academia, industry, government, and nonprofit organizations. The 2017 International Digital Mammography DREAM Challenge, funded by the Laura and John Arnold Foundation, was organized by Sage Bionetworks, IBM Research, Icahn School of Medicine at Mount Sinai, and Kaiser Permanente Washington Health Research Institute.

We invited teams from across the globe to develop open-source machine-learning algorithms to improve mammography interpretation. Current U.S. practice is that radiologists look at mammograms to identify lesions, or unusual features, indicating potential cancer. The challenge asked AI developers to create algorithms that do the same: Find patterns in images that are associated with cancer. The algorithms were trained using many mammograms with and without cancerous lesions, with the goal of improving the predictive accuracy of digital mammography for detecting breast cancer early.

The reason for this goal? Of the 40 million annual U.S. screening mammograms, 9-10% result in patients being asked to return for more diagnostic testing. Only about 5% of these recalls result in a breast cancer diagnosis. The false positives lead to unnecessary tests or even cancer treatment and contribute to the annual $10 billion in U.S. mammography costs.
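To make the scale concrete, the figures in the paragraph above can be worked through in a few lines. This is only a rough sketch: the 9.5% recall rate is an assumed midpoint of the quoted 9-10% range, and the function name `recall_breakdown` is hypothetical.

```python
# Figures quoted in the article. The 9.5% recall rate is an assumed
# midpoint of the quoted 9-10% range, used only for illustration.
ANNUAL_SCREENS = 40_000_000
RECALL_RATE = 0.095
CANCER_SHARE_OF_RECALLS = 0.05


def recall_breakdown(screens, recall_rate, cancer_share):
    """Return (recalls, cancer diagnoses, false-positive recalls) per year."""
    recalls = screens * recall_rate
    cancers = recalls * cancer_share
    false_positives = recalls - cancers
    # Round to whole people to absorb floating-point noise.
    return round(recalls), round(cancers), round(false_positives)


recalls, cancers, false_positives = recall_breakdown(
    ANNUAL_SCREENS, RECALL_RATE, CANCER_SHARE_OF_RECALLS
)
print(f"Recalls per year:         {recalls:,}")
print(f"Cancer diagnoses:         {cancers:,}")
print(f"False-positive recalls:   {false_positives:,}")
```

Under these assumptions, roughly 3.8 million people are recalled each year, about 190,000 of those recalls end in a cancer diagnosis, and about 3.6 million are false positives.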

Kaiser Permanente Washington supported the crowdsourced DREAM Challenge by providing more than 144,000 anonymized screening mammogram images from more than 80,000 people, with 952 breast cancers. Images were linked to data on risk factors from the Breast Cancer Surveillance Consortium, a national network of breast imaging registries.

DREAM Challenge competitors included 1,100 computer scientists, radiologists, students, and others in 126 teams from 44 countries. The challenge used an innovative approach that limited the release of de-identified digital images. Competitors were provided only a small number of images to develop their AI algorithms. They submitted refined algorithms to test on a larger dataset that was kept secure. The top 8 performing teams were invited to collaborate on a combined AI model that improved on individual model performance.

DREAM results

The international challengers did not create an AI algorithm that could meet or beat radiologists’ performance. However, combining an AI algorithm with radiologist assessment might improve accuracy. Our results suggest supplementing single-radiologist reading with AI could prevent half a million Americans from having unnecessary follow-up tests after an annual screening mammogram. It’s important to state that we did not evaluate how AI could be added to care or if adding it would actually lead to better accuracy.

The details are in our paper and appendix, but here’s what we found:

- No single AI algorithm outperformed U.S. radiologists.

- But an ensemble of algorithms combined with single-radiologist assessment improved overall accuracy, at least in our challenge.

- So adding AI to current U.S. mammography practice might improve breast screening performance, reduce costs, and relieve radiologist workload.

However, lessons from mammography with computer-assisted detection tell us that we need more research on implementing and evaluating a combined approach of AI and radiologist assessment.
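The idea of combining an ensemble of algorithms with a single radiologist's assessment can be sketched roughly as follows. This is a minimal illustration, not the method used in the paper: the unweighted average, the `radiologist_weight` parameter, and the example scores are all assumptions made for this sketch.

```python
from statistics import mean


def ensemble_score(model_scores):
    """Average the per-model cancer scores (a simple unweighted ensemble)."""
    if not model_scores:
        raise ValueError("need at least one model score")
    return mean(model_scores)


def combined_score(model_scores, radiologist_recalled, radiologist_weight=0.5):
    """Blend the ensemble score with a radiologist's recall decision.

    radiologist_weight is a hypothetical tuning parameter between 0 and 1;
    a real system would have to learn and validate it on held-out data.
    """
    if not 0.0 <= radiologist_weight <= 1.0:
        raise ValueError("radiologist_weight must be between 0 and 1")
    ai = ensemble_score(model_scores)
    reader = 1.0 if radiologist_recalled else 0.0
    return radiologist_weight * reader + (1.0 - radiologist_weight) * ai


# Made-up scores from three hypothetical algorithms for one mammogram.
scores = [0.10, 0.20, 0.30]
print(combined_score(scores, radiologist_recalled=True))
print(combined_score(scores, radiologist_recalled=False))
```

In this sketch a recall decision would follow from comparing the combined score with a threshold chosen to balance missed cancers against false positives. Choosing and validating that threshold is exactly the kind of implementation research the paragraph above calls for.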

Learning about AI and health care

We learned a lot in this project about developing AI for real-world health care.

- Our approach allowed open-science AI development while protecting health data with a firewall. This strategy could balance data access and security in future AI health projects.

- In a promising direction for future AI, some DREAM Challenge teams developed algorithms that performed well in both predicting future cancer and finding specific cancer lesions.

- Having experts who know the data and clinical nuances is essential in AI projects. Without this expertise in the DREAM Challenge, we might have asked questions that had less clinical relevance or that risked misinterpreting the data.

And more cancer-screening AI is coming

In January, Google scientists reported that their AI system improved breast screening accuracy over U.S. and U.K. radiologists. The field is moving fast, so all of us working on AI for cancer screening must consider these issues now:

Preventing overdiagnosis. Any screening carries risks of false positives leading to unnecessary tests and treatment. Anyone introducing new breast screening tools must ask: Do they reduce this problem?

Ensuring generalizability. AI must be accurate for all patients. We must ask: Do the algorithms we develop account for screening history, genetics, physiology such as breast density, and demographics?

Keeping up with AI. AI tools need constant refinement. Think about how Tesla continually updates software and knowledge, for example, on avoiding accidents. Health care AI also needs to continually learn and improve. How will U.S. Food and Drug Administration-approved health AI tools keep up with algorithm iterations?

Our study shows that stories about AI replacing health professionals are exaggerated. We found that AI enhanced a radiologist’s experience and could make U.S. mammography safer for women and more efficient for delivery systems.
